Connect Apache NiFi to ClickHouse

Apache NiFi is an open-source workflow management software designed to automate data flow between software systems. It allows the creation of ETL data pipelines and is shipped with more than 300 data processors. This step-by-step tutorial shows how to connect Apache NiFi to ClickHouse as both a source and destination, and to load a sample dataset.1. Gather your connection details

To connect to ClickHouse with HTTP(S) you need this information:

The HOST and PORT: typically, the port is 8443 when using TLS or 8123 when not using TLS.

The DATABASE NAME: out of the box, there is a database named

default, use the name of the database that you want to connect to.The USERNAME and PASSWORD: out of the box, the username is

default. Use the username appropriate for your use case.

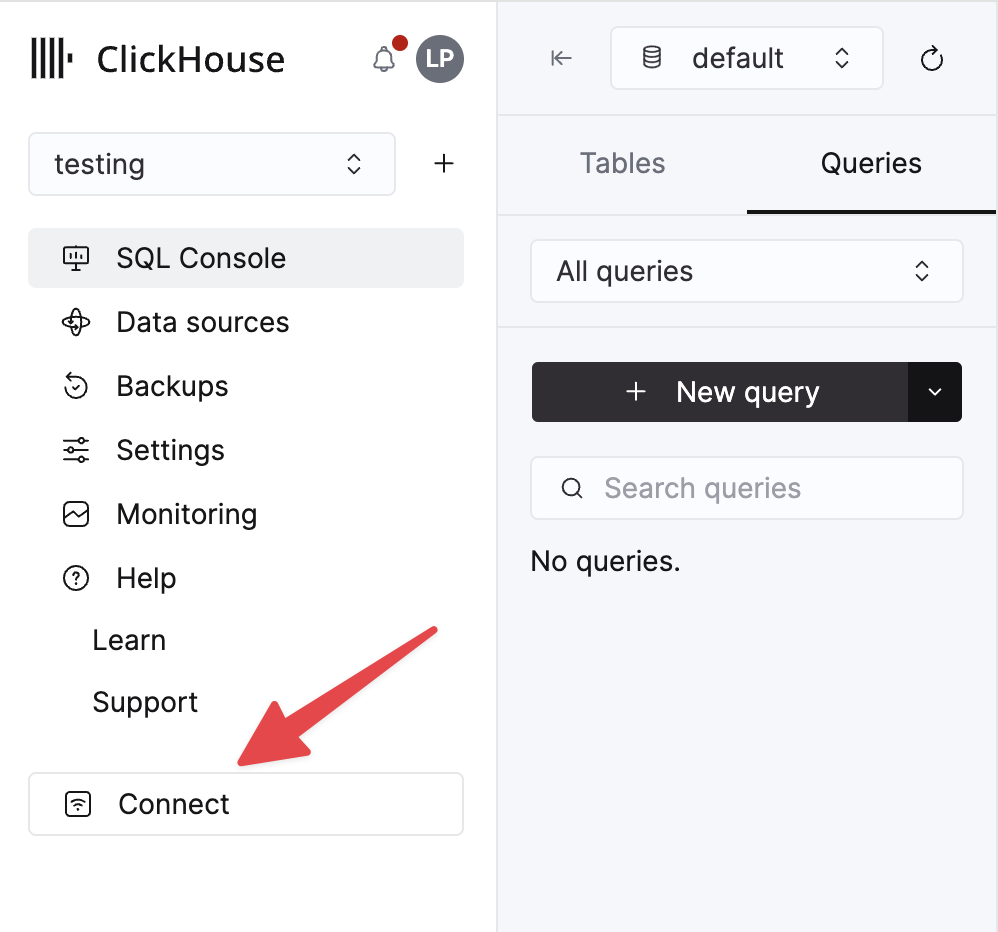

The details for your ClickHouse Cloud service are available in the ClickHouse Cloud console. Select the service that you will connect to and click Connect:

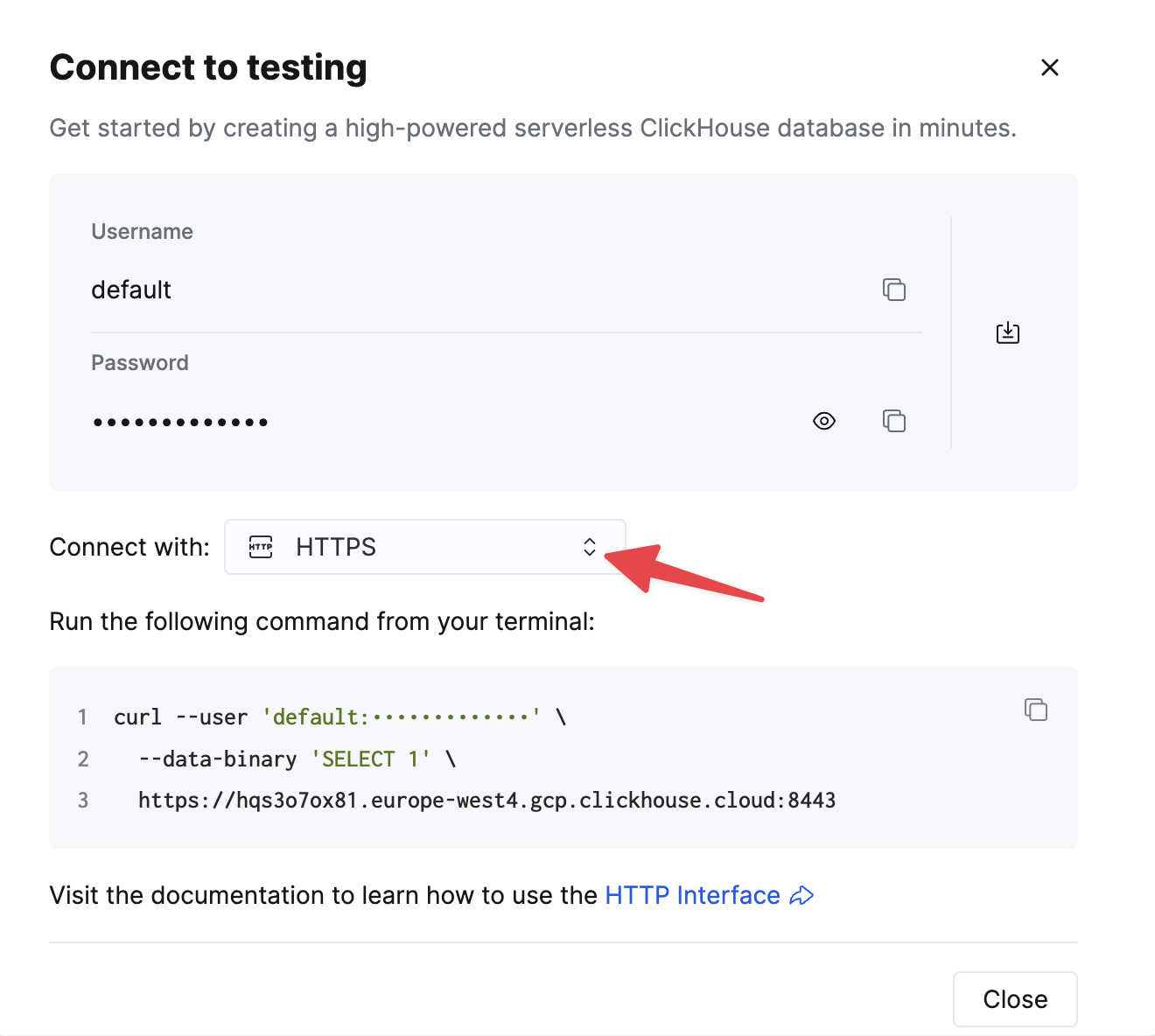

Choose HTTPS, and the details are available in an example curl command.

If you are using self-managed ClickHouse, the connection details are set by your ClickHouse administrator.

2. Download and run Apache NiFi

- For a new setup, download the binary from https://nifi.apache.org/download.html and start by running

./bin/nifi.sh start

3. Download the ClickHouse JDBC driver

- Visit the ClickHouse JDBC driver release page on GitHub and look for the latest JDBC release version

- In the release version, click on "Show all xx assets" and look for the JAR file containing the keyword "shaded" or "all", for example,

clickhouse-jdbc-0.5.0-all.jar - Place the JAR file in a folder accessible by Apache NiFi and take note of the absolute path

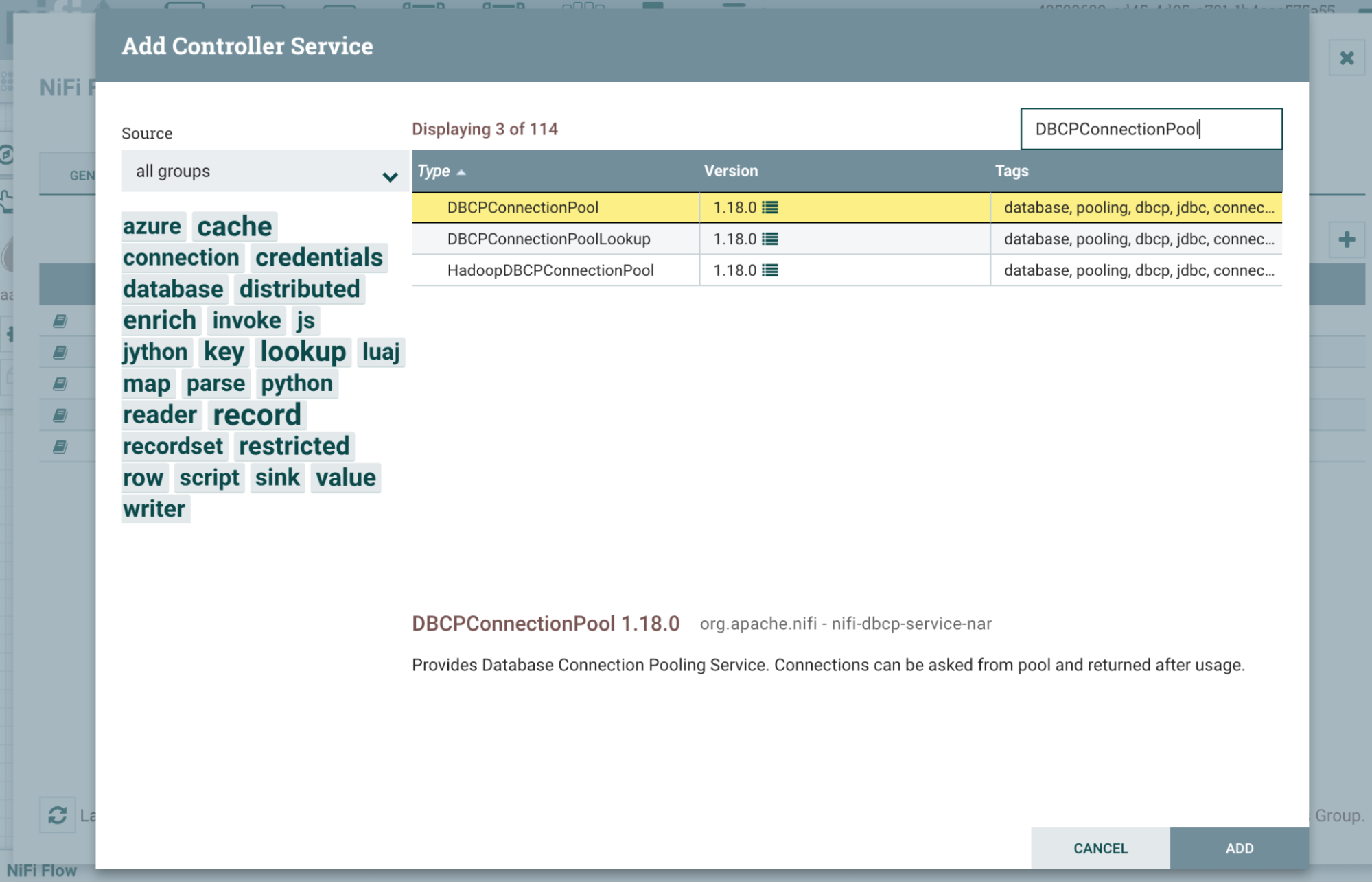

4. Add DBCPConnectionPool Controller Service and configure its properties

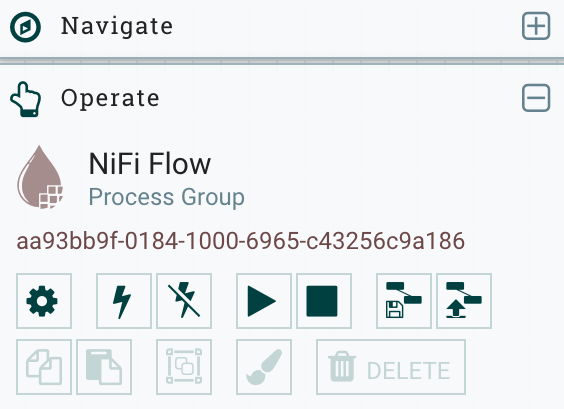

To configure a Controller Service in Apache NiFi, visit the NiFi Flow Configuration page by clicking on the "gear" button

Select the Controller Services tab and add a new Controller Service by clicking on the

+button at the top right

Search for

DBCPConnectionPooland click on the "Add" button

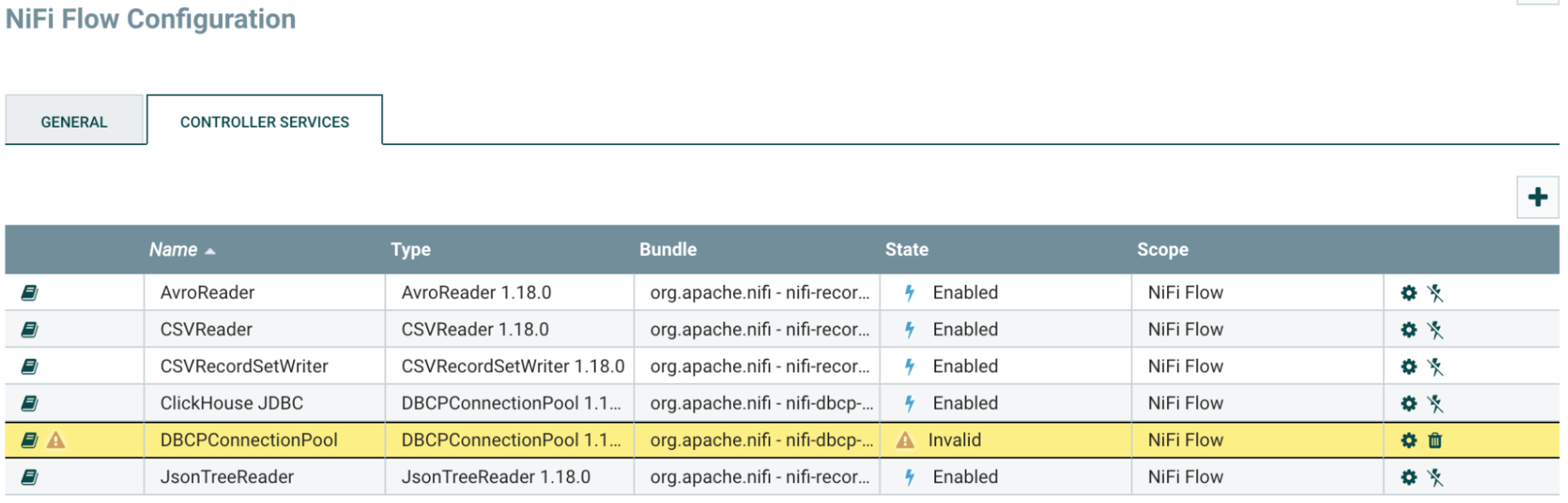

The newly added DBCPConnectionPool will be in an Invalid state by default. Click on the "gear" button to start configuring

Under the "Properties" section, input the following values

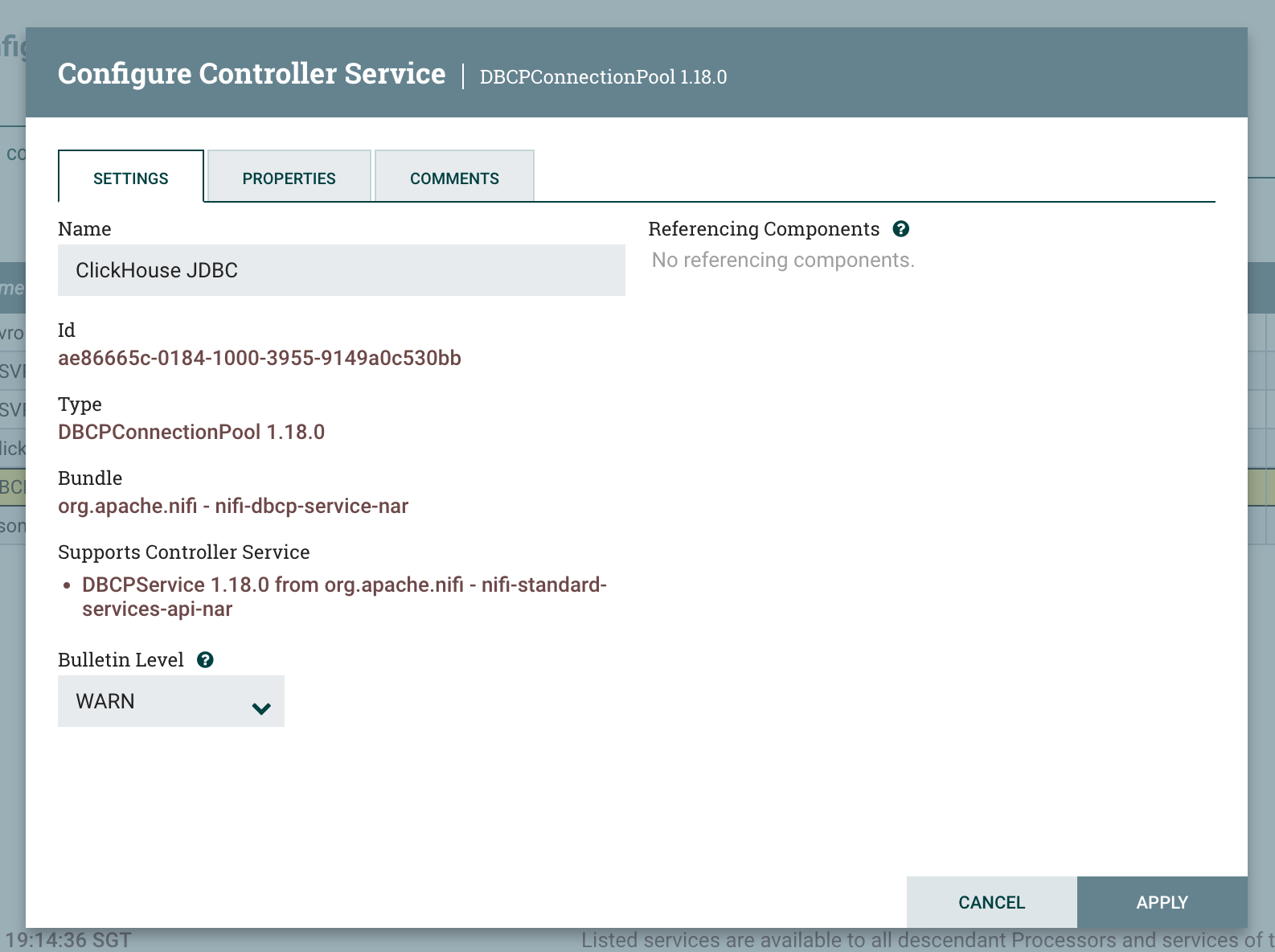

Property Value Remark Database Connection URL jdbc:ch:https://HOSTNAME:8443/default?ssl=true Replace HOSTNAME in the connection URL accordingly Database Driver Class Name com.clickhouse.jdbc.ClickHouseDriver Database Driver Location(s) /etc/nifi/nifi-X.XX.X/lib/clickhouse-jdbc-0.X.X-patchXX-shaded.jar Absolute path to the ClickHouse JDBC driver JAR file Database User default ClickHouse username Password password ClickHouse password In the Settings section, change the name of the Controller Service to "ClickHouse JDBC" for easy reference

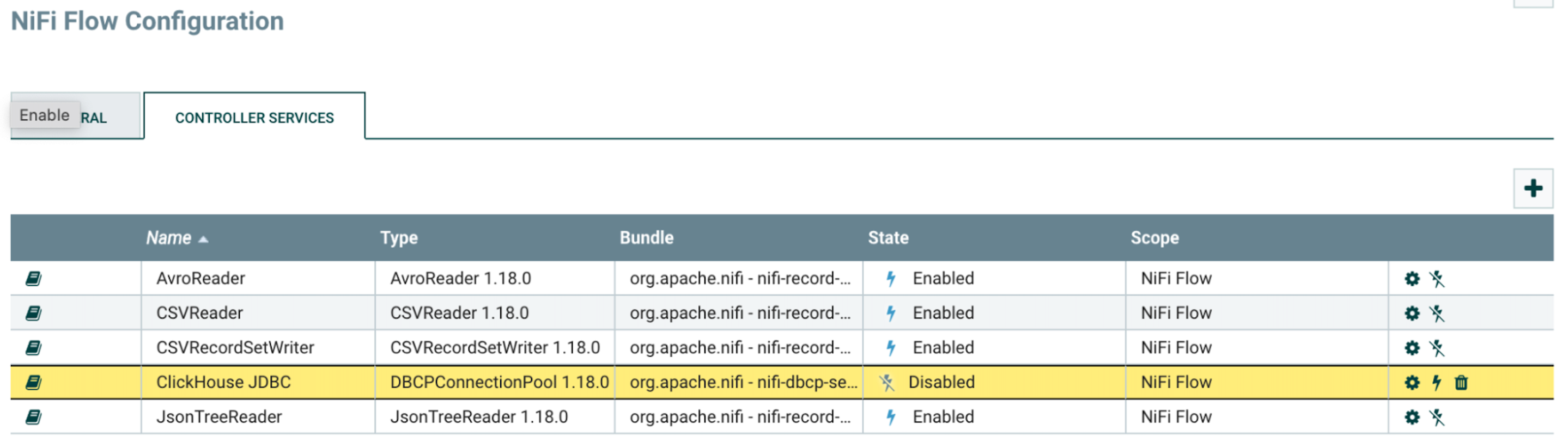

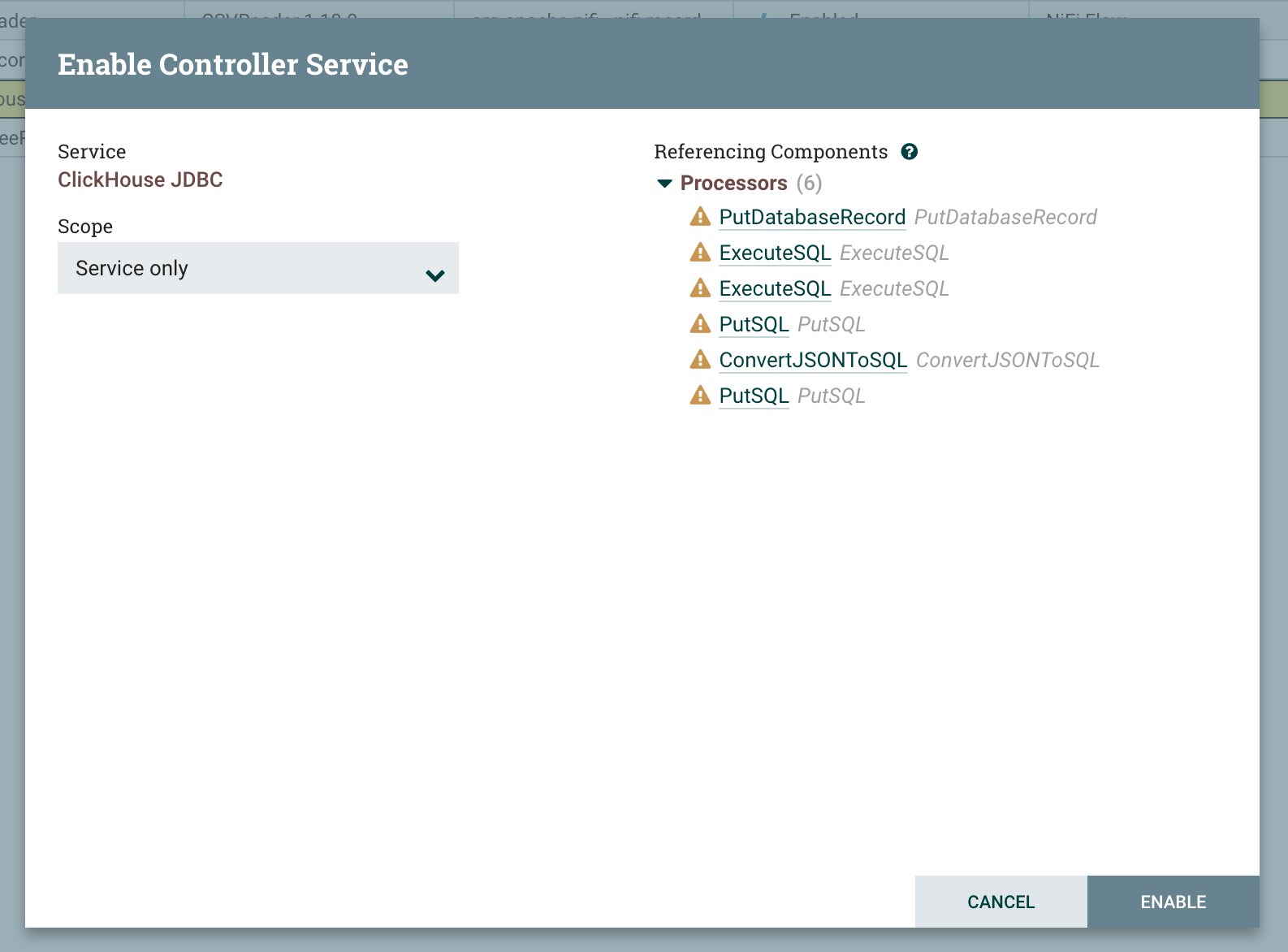

Activate the DBCPConnectionPool Controller Service by clicking on the "lightning" button and then the "Enable" button

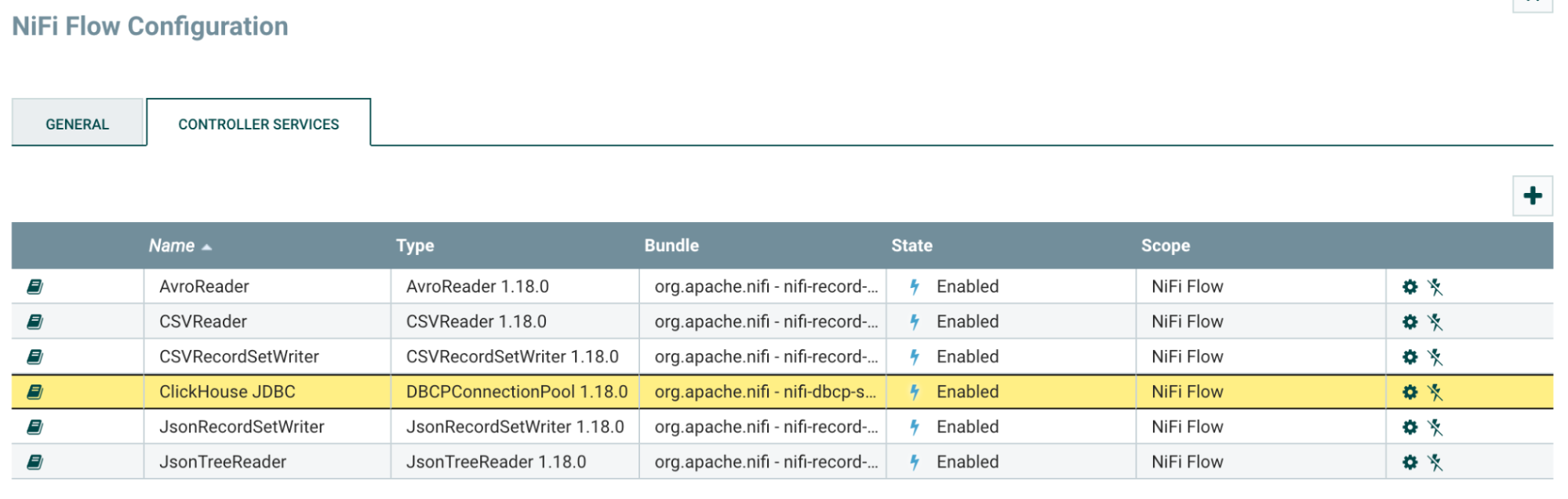

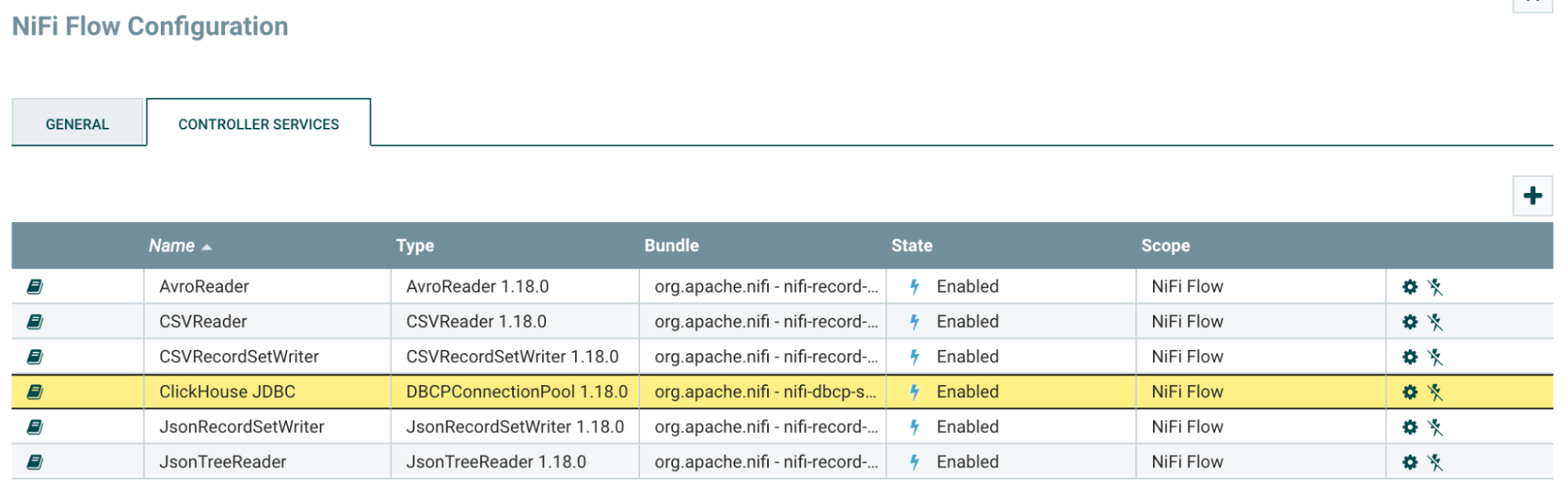

Check the Controller Services tab and ensure that the Controller Service is enabled

5. Read from a table using the ExecuteSQL processor

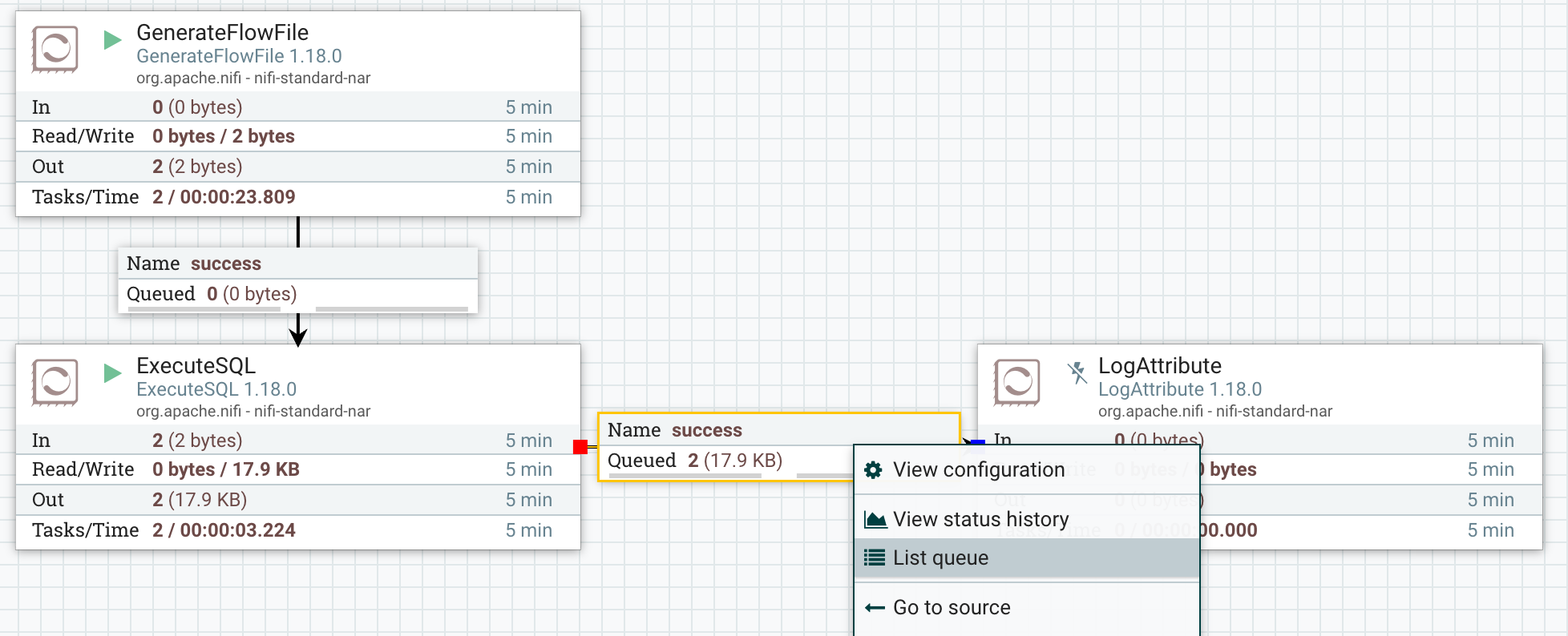

Add an ExecuteSQL processor, along with the appropriate upstream and downstream processors

Under the "Properties" section of the ExecuteSQL processor, input the following values

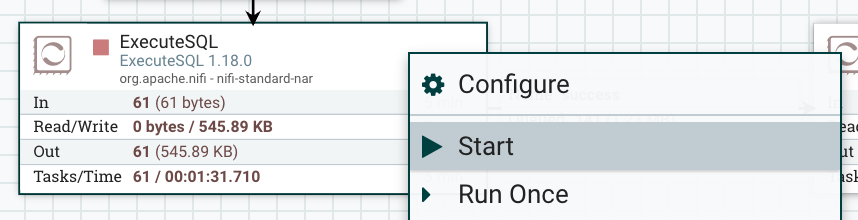

Property Value Remark Database Connection Pooling Service ClickHouse JDBC Select the Controller Service configured for ClickHouse SQL select query SELECT * FROM system.metrics Input your query here Start the ExecuteSQL processor

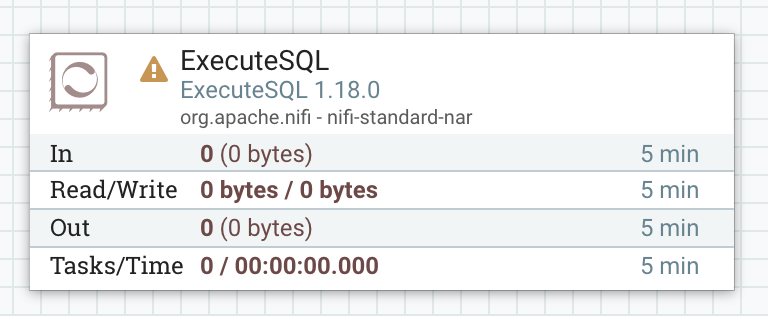

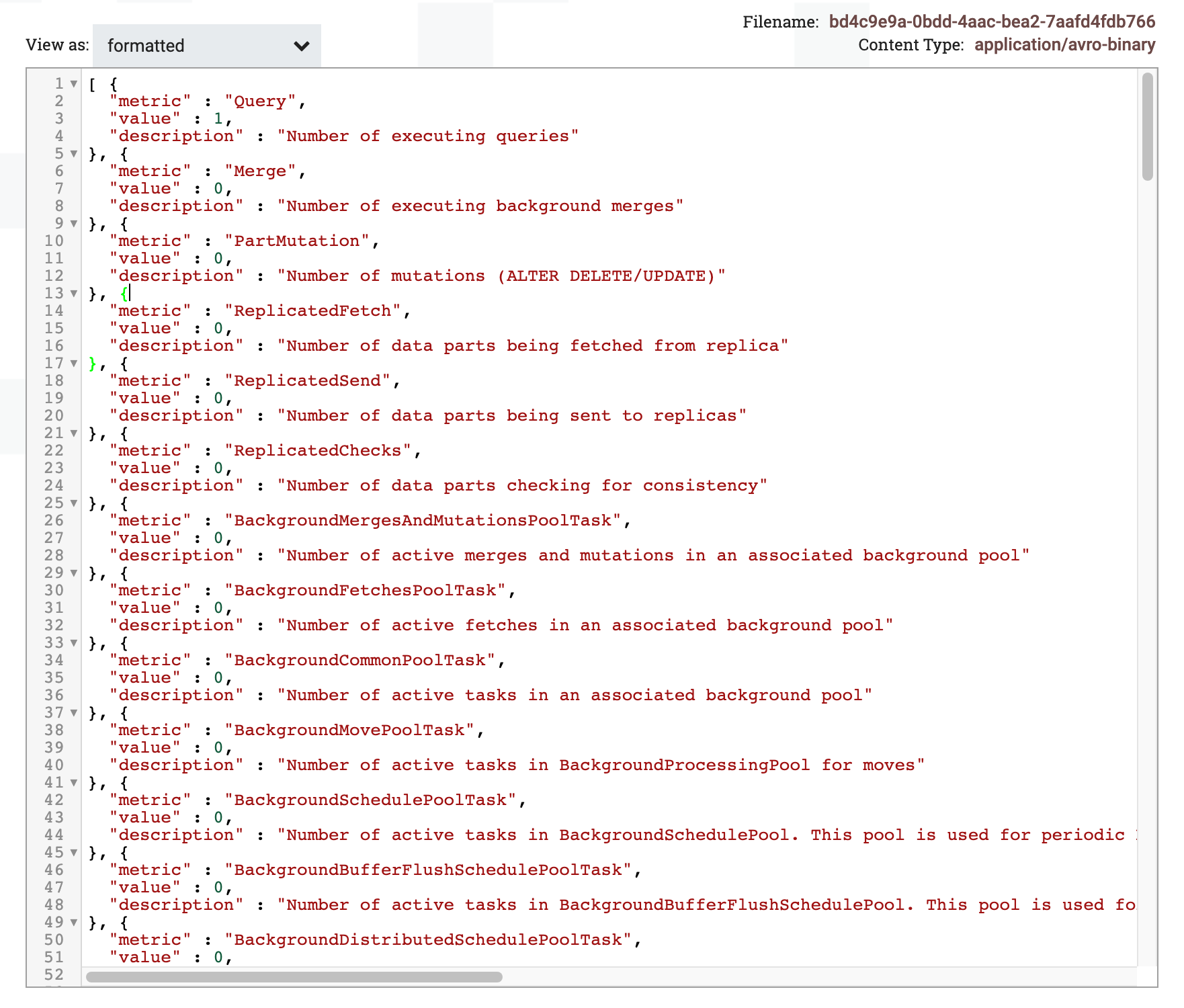

To confirm that the query has been processed successfully, inspect one of the FlowFile in the output queue

Switch view to "formatted" to view the result of the output FlowFile

6. Write to a table using MergeRecord and PutDatabaseRecord processor

To write multiple rows in a single insert, we first need to merge multiple records into a single record. This can be done using the MergeRecord processor

Under the "Properties" section of the MergeRecord processor, input the following values

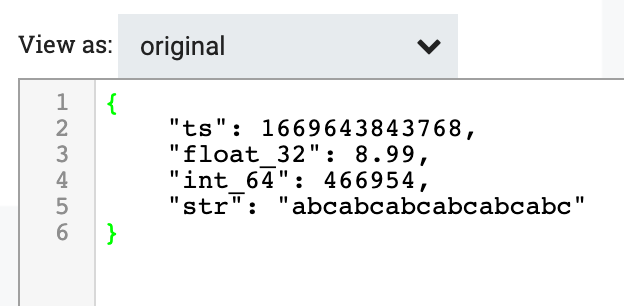

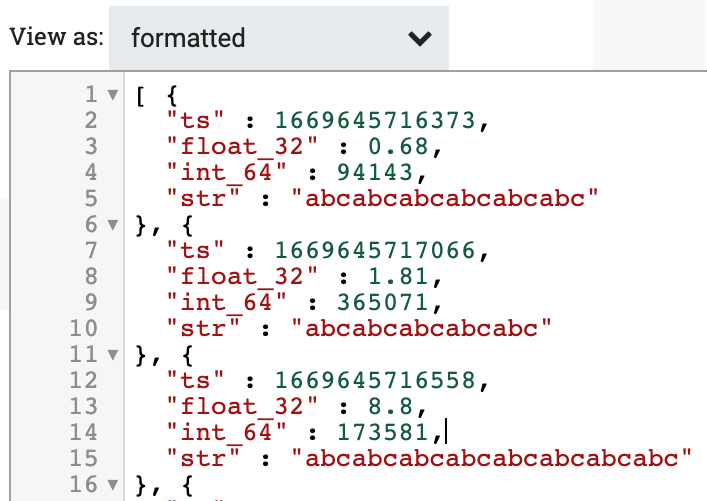

Property Value Remark Record Reader JSONTreeReader Select the appropriate record reader Record Writer JSONReadSetWriter Select the appropriate record writer Minimum Number of Records 1000 Change this to a higher number so that the minimum number of rows are merged to form a single record. Default to 1 row Maximum Number of Records 10000 Change this to a higher number than "Minimum Number of Records". Default to 1,000 rows To confirm that multiple records are merged into one, examine the input and output of the MergeRecord processor. Note that the output is an array of multiple input records

Input

Ouput

Under the "Properties" section of the PutDatabaseRecord processor, input the following values

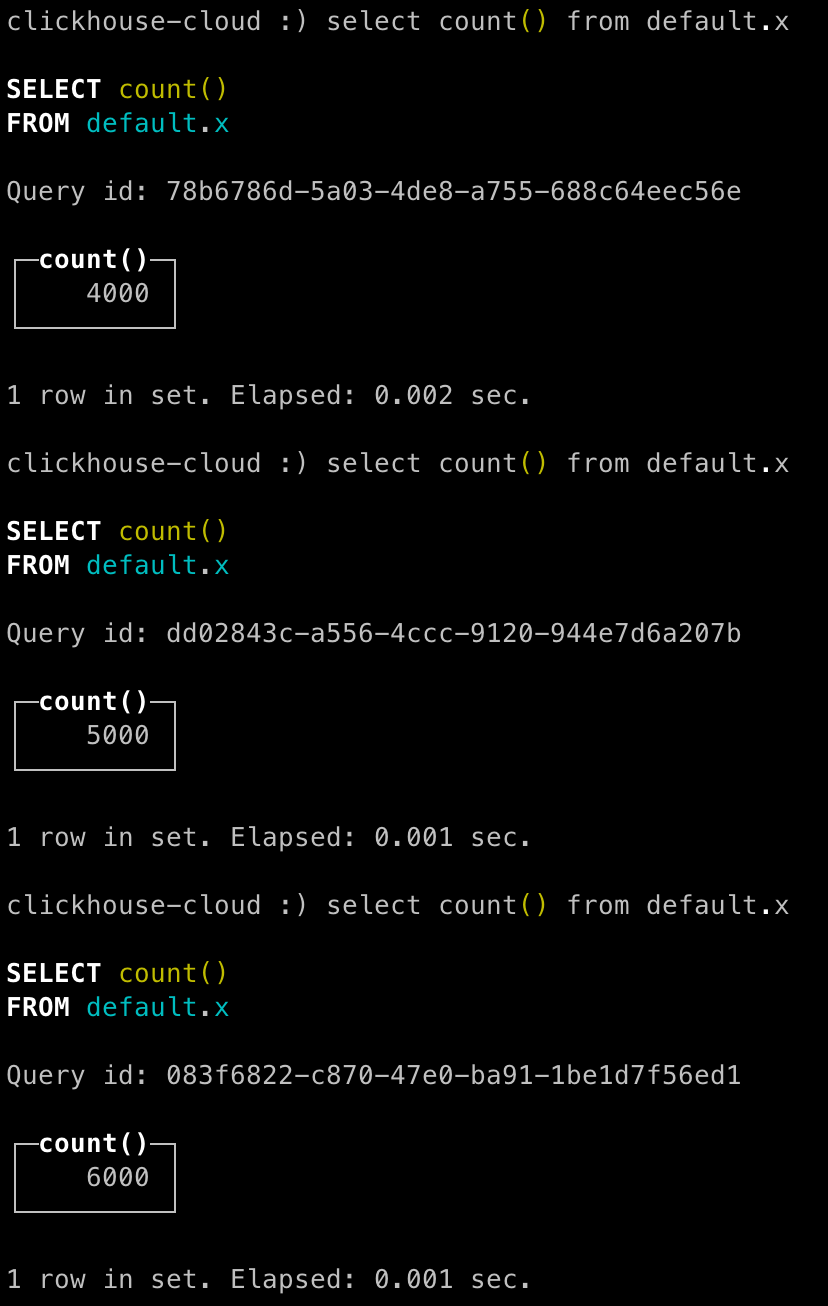

Property Value Remark Record Reader JSONTreeReader Select the appropriate record reader Database Type Generic Leave as default Statement Type INSERT Database Connection Pooling Service ClickHouse JDBC Select the ClickHouse controller service Table Name tbl Input your table name here Translate Field Names false Set to "false" so that field names inserted must match the column name Maximum Batch Size 1000 Maximum number of rows per insert. This value should not be lower than the value of "Minimum Number of Records" in MergeRecord processor To confirm that each insert contains multiple rows, check that the row count in the table is incrementing by at least the value of "Minimum Number of Records" defined in MergeRecord.

Congratulations - you have successfully loaded your data into ClickHouse using Apache Nifi!